co-authored-by: Frederick Borges <fborges@opennebula.io>

co-authored-by: Neal Hansen <nhansen@opennebula.io>

co-authored-by: Daniel Clavijo Coca <dclavijo@opennebula.io>

co-authored-by: Pavel Czerný <pczerny@opennebula.systems>

BACKUP INTERFACE
================
* Backups are exposed through a special Datastore type (BACKUP_DS) and a
  special Image type (BACKUP). These new types can only be used for
  backing up VMs. This approach makes it possible to:
  - Implement tier-based backup policies (backups made to different
    locations).
  - Leverage the access control and quota systems.
  - Support different storage and backup technologies.

* Backup interface for the VMs:
  - A VM configures backups with BACKUP_CONFIG. This attribute can be set
    in the VM template or updated with the updateconf API call. It can
    include:
    + BACKUP_VOLATILE: whether to back up volatile disks.
    + FS_FREEZE: how the file system is frozen for running VMs
      (qemu-agent, suspend or none). When possible, backups are crash
      consistent.
    + KEEP_LAST: keep only a given number of backups.
  - Backups are initiated by the one.vm.backup API call, which requires
    the target Datastore to perform the backup (one-shot). This is
    exposed by the onevm backup command.
  - Backups can be periodic through scheduled actions.
  - Backup configuration is updated with the one.vm.updateconf API call.

* Restore interface:
  - Restores are initiated by the one.image.restore API call. This is
    exposed by the oneimage restore command.
  - Restore includes configurable options for the VM template:
    + NO_IP: do not preserve IP addresses (but keep the NICs and network
      mapping).
    + NO_NIC: do not preserve network mappings.
  - Other template attributes:
    + Clean PCI devices, including network configuration in case of
      TYPE=NIC attributes. By default it removes SHORT_ADDRESS and leaves
      the "auto" selection attributes.
    + Clean NUMA_NODE, removes node id and cpu sets. It keeps the NUMA node
  - It is possible to restore single files stored in the repository by
    using the backup-specific URL.

* Sunstone (Ruby version) has been updated to expose these features.
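
As an illustration of the BACKUP_CONFIG attribute described above, the following Python sketch renders a template fragment from the three documented keys. The helper name, the YES/NO encoding of BACKUP_VOLATILE and the exact FS_FREEZE literals are assumptions made for the example; check the values your OpenNebula version accepts before using it.

```python
def render_backup_config(backup_volatile=False, fs_freeze="none", keep_last=None):
    """Render a BACKUP_CONFIG vector attribute as OpenNebula template text.

    Hypothetical helper: the attribute names come from the commit message,
    the value encodings are assumptions.
    """
    # Spellings taken from the text above; the real literals may differ.
    allowed = ("qemu-agent", "suspend", "none")
    if fs_freeze not in allowed:
        raise ValueError(f"FS_FREEZE must be one of {allowed}")

    attrs = [
        ("BACKUP_VOLATILE", "YES" if backup_volatile else "NO"),
        ("FS_FREEZE", fs_freeze),
    ]
    if keep_last is not None:
        if keep_last < 1:
            raise ValueError("KEEP_LAST must be a positive number of backups")
        attrs.append(("KEEP_LAST", str(keep_last)))

    body = ",\n".join(f'  {key} = "{value}"' for key, value in attrs)
    return f"BACKUP_CONFIG = [\n{body}\n]"


if __name__ == "__main__":
    print(render_backup_config(backup_volatile=True, fs_freeze="suspend", keep_last=3))
```

The resulting text could be placed in a VM template or in the file passed to an updateconf call.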

BACKUP DRIVERS & IMPLEMENTATION
===============================
* Backup operation is implemented by a combination of 3 driver operations:
  - VMM. New operation (internal, oned <-> one_vmm_exec.rb) to orchestrate
    backups for RUNNING VMs.
  - TM. This commit introduces 2 new operations (and their
    corresponding _live variants):
    + pre_backup(_live): prepares the disks to be backed up in the
      repository. It is specific to the driver: (i) ceph uses the export
      operation; (ii) qcow2/raw uses snapshot-create-as and fs_freeze as
      needed.
    + post_backup(_live): performs cleaning operations, i.e. removes KVM
      snapshots or tmp dirs.
  - DATASTORE. Each backup technology is represented by its
    corresponding driver, which needs to implement:
    + backup: takes the VM disks in file (qcow2) format and stores them
      in the backup repository.
    + restore: takes a backup image and restores the associated disks
      and VM template.
    + monitor: gathers the available space in the repository.
    + rm: removes existing backups.
    + stat: returns the "restored" size of a disk stored in a backup.
    + downloader pseudo-URL handler, in the form
      <backup_proto>://<driver_snapshot_id>/<disk filename>
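
The way the TM and DATASTORE operations above fit together can be sketched in Python as follows. This is only an illustration: the real drivers are scripts invoked by oned, so the class, the method signatures and the return values here are hypothetical, and running post_backup even when the upload fails is an assumption about the intended cleanup behaviour.

```python
class RecordingDriver:
    """Stand-in for the TM and datastore drivers; it only records call order."""

    def __init__(self, fail_backup=False):
        self.calls = []
        self.fail_backup = fail_backup

    def pre_backup(self, vm_id):
        self.calls.append("pre_backup")
        return ["disk.0.qcow2"]  # hypothetical exported disk file

    def pre_backup_live(self, vm_id):
        self.calls.append("pre_backup_live")
        return ["disk.0.qcow2"]

    def backup(self, vm_id, disk_files):
        self.calls.append("backup")
        if self.fail_backup:
            raise RuntimeError("upload failed")
        return "snapshot-id"  # hypothetical backup identifier

    def post_backup(self, vm_id):
        self.calls.append("post_backup")

    def post_backup_live(self, vm_id):
        self.calls.append("post_backup_live")


def run_backup(tm, datastore, vm_id, running):
    """Prepare disks, store them in the repository, then always clean up."""
    suffix = "_live" if running else ""  # running VMs use the _live variants
    disk_files = getattr(tm, "pre_backup" + suffix)(vm_id)
    try:
        return datastore.backup(vm_id, disk_files)
    finally:
        # Assumed: cleanup (snapshots, tmp dirs) happens on success and failure.
        getattr(tm, "post_backup" + suffix)(vm_id)


if __name__ == "__main__":
    driver = RecordingDriver()
    run_backup(driver, driver, vm_id=63, running=True)
    print(driver.calls)
```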

BACKUP MANAGEMENT
=================
Backup actions may take a long time, keeping some vmm_exec threads in
use and blocking other VMM operations. Backups are therefore planned
by the scheduler through the sched action interface.

Two attributes have been added to sched.conf:
* MAX_BACKUPS: maximum number of active backup operations in the cloud.
  No more backups will be started beyond this limit.
* MAX_BACKUPS_HOST: maximum number of active backups per host.

* Fix onevm CLI to properly show and manage scheduled actions. --schedule
  now supports "now", as well as relative times +<seconds_from_stime>:

    onevm backup --schedule now -d 100 63

* Backup is added to VM_ADMIN_ACTIONS in oned.conf. Regular users need
  to use the batch interface or request specific permissions.
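
The two --schedule forms mentioned above ("now" and +<seconds_from_stime>) could be resolved to an absolute time along these lines. This is a sketch, not the CLI's actual code: the function name is hypothetical, stime is taken to be the VM start time as an epoch value, and any other format the CLI may accept is deliberately left out.

```python
import time


def resolve_schedule(value, stime, now=None):
    """Turn a --schedule argument into an epoch timestamp.

    value: "now" or "+<seconds_from_stime>"
    stime: VM start time (epoch seconds), used as the base for relative times
    now:   current time, injectable for testing
    """
    if now is None:
        now = int(time.time())
    if value == "now":
        return now
    if value.startswith("+") and value[1:].isdigit():
        return stime + int(value[1:])
    raise ValueError(f"unsupported schedule value in this sketch: {value!r}")


if __name__ == "__main__":
    print(resolve_schedule("+3600", stime=1_700_000_000))
```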

Internal restructure of the Scheduler:
- The whole sched_actions interface is now in the SchedActionsXML class
  and files. This class uses references to VM XML, and MUST be used in
  the same lifetime scope.
- XMLRPC API calls for sched actions have been moved to
  ScheduledActionXML.cc as static functions.
- VirtualMachineActionPool includes counters for active backups (total
  and per host).
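
The effect of MAX_BACKUPS and MAX_BACKUPS_HOST, combined with the total and per-host counters, can be pictured with a small admission check. This Python sketch assumes the scheduler simply refuses to start a backup once either limit is reached; the function and parameter names are hypothetical and the real scheduler is implemented in C++.

```python
def can_start_backup(host_id, active_per_host, max_backups, max_backups_host):
    """Return True if one more backup may be started on host_id.

    active_per_host maps host id -> number of backups currently running there.
    """
    total_active = sum(active_per_host.values())
    if total_active >= max_backups:
        return False  # cloud-wide limit reached
    return active_per_host.get(host_id, 0) < max_backups_host


if __name__ == "__main__":
    active = {0: 2, 1: 1}
    print(can_start_backup(1, active, max_backups=5, max_backups_host=2))
```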

SUPPORTED PLATFORMS
===================
* hypervisor: KVM
* TM: qcow2/shared/ssh, ceph
* backup: restic, rsync

Notes on Ceph
* Ceph backups are performed in the following steps:
  1. A snapshot of each disk is taken (group snapshots cannot be used as
     it seems we cannot export the disks afterwards).
  2. Disks are exported to a file.
  3. The file is converted to qcow2 format.
  4. Disk files are uploaded to the backup repo.
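
Steps 1 to 3 above map naturally onto the standard rbd and qemu-img tools. The Python sketch below only builds the command lines, it does not run them; the pool, image, snapshot and directory names are placeholders, and the actual TM driver may use different options. Step 4 is left to the backup datastore driver.

```python
def ceph_backup_commands(pool, image, snap, workdir):
    """Build the commands for snapshot, export and qcow2 conversion of one disk."""
    spec = f"{pool}/{image}@{snap}"       # RBD snapshot spec
    raw = f"{workdir}/{image}.raw"        # exported raw file (placeholder name)
    qcow2 = f"{workdir}/{image}.qcow2"    # converted file handed to the backup driver
    return [
        ["rbd", "snap", "create", spec],                                  # step 1
        ["rbd", "export", spec, raw],                                     # step 2
        ["qemu-img", "convert", "-f", "raw", "-O", "qcow2", raw, qcow2],  # step 3
    ]


if __name__ == "__main__":
    for cmd in ceph_backup_commands("one", "one-53-0", "backup", "/var/tmp/backup"):
        print(" ".join(cmd))
```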

TODO:
* Confirm crash consistent snapshots cannot be used in Ceph

TODO:
* Check if using the VM dir instead of the full path is better to
  accommodate DS migrations, i.e.:
  - Current path: /var/lib/one/datastores/100/53/backup/disk.0
  - Proposal: 53/backup/disk.0

RESTIC DRIVER
=============
The restic driver, developed together with this feature, is part of the
EE edition.

* It supports the SFTP protocol. The following attributes are
  supported:
  - RESTIC_SFTP_SERVER
  - RESTIC_SFTP_USER: only if different from oneadmin
  - RESTIC_PASSWORD
  - RESTIC_IONICE: run restic under a given ionice priority (class 2)
  - RESTIC_NICE: run restic under a given nice value
  - RESTIC_BWLIMIT: limit restic upload/download bandwidth
  - RESTIC_COMPRESSION: restic 0.14 implements compression (three modes:
    off, auto, max). This requires version 2 repositories. By default,
    auto is used (average compression without too much CPU usage).
  - RESTIC_CONNECTIONS: sets the number of concurrent connections to a
    backend (5 by default). For high-latency backends this number can be
    increased.

* downloader URL: restic://<datastore_id>/<snapshot_id>/<file_name>,
  where snapshot_id is the restic snapshot hash. It can be used to
  recover single disk images from a backup. These URLs support:
  - RESTIC_CONNECTIONS
  - RESTIC_BWLIMIT
  - RESTIC_IONICE
  - RESTIC_NICE
  These options need to be defined in the associated datastore.
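
To make the restic downloader URL layout concrete, here is a small Python sketch that splits such a URL into its three components. The function and type names are hypothetical and the real downloader is a separate script; only the URL shape is taken from the description above, and the numeric datastore ID is an assumption.

```python
from typing import NamedTuple
from urllib.parse import urlsplit


class ResticSource(NamedTuple):
    datastore_id: int
    snapshot_id: str
    file_name: str


def parse_restic_url(url):
    """Split restic://<datastore_id>/<snapshot_id>/<file_name> into its parts."""
    parts = urlsplit(url)
    if parts.scheme != "restic":
        raise ValueError(f"not a restic URL: {url!r}")
    # The datastore ID lands in the netloc, the rest in the path.
    segments = parts.path.lstrip("/").split("/", 1)
    if not parts.netloc.isdigit() or len(segments) != 2 or not all(segments):
        raise ValueError(f"malformed restic URL: {url!r}")
    return ResticSource(int(parts.netloc), segments[0], segments[1])


if __name__ == "__main__":
    print(parse_restic_url("restic://100/a1b2c3d4/disk.0"))
```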

RSYNC DRIVER
============
An rsync driver is included as part of the CE distribution. It uses the
rsync tool to store backups on a remote server through SSH:

* The following attributes are supported to configure the backup
  datastore:
  - RSYNC_HOST
  - RSYNC_USER
  - RSYNC_ARGS: arguments to perform the rsync operation (-aS by default)

* downloader URL: rsync://<ds_id>/<vmid>/<hash>/<file> can be used to
  recover single files from an existing backup (RSYNC_HOST and
  RSYNC_USER need to be set in ds_id).
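
As a rough picture of how the three datastore attributes could translate into an rsync invocation over SSH, consider the Python sketch below. It only assembles the argument list; the function name and the source and destination directories are hypothetical, and the layout the real driver uses on the remote server is not shown here.

```python
import shlex


def rsync_command(src_dir, dest_dir, rsync_host, rsync_user, rsync_args="-aS"):
    """Build an rsync argv that copies the contents of src_dir to the remote host.

    rsync_host, rsync_user and rsync_args stand for the RSYNC_HOST, RSYNC_USER
    and RSYNC_ARGS datastore attributes; -aS is the documented default.
    """
    # A trailing slash makes rsync copy the directory contents, not the directory.
    source = src_dir.rstrip("/") + "/"
    # user@host:path makes rsync use a remote shell (ssh by default).
    target = f"{rsync_user}@{rsync_host}:{dest_dir}"
    return ["rsync", *shlex.split(rsync_args), source, target]


if __name__ == "__main__":
    cmd = rsync_command("/var/tmp/backup", "/backups/63", "backup.example.com", "oneadmin")
    print(" ".join(cmd))
```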

EMULATOR_CPUS
=============
This commit includes a feature unrelated to backups:

* Add EMULATOR_CPUS (KVM). This host (or cluster) attribute defines the
  CPU IDs where the emulator threads will be pinned. If this value is
  not defined, the allocated CPUs will be used when using a PIN policy.
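
The fallback rule above can be sketched in Python. In libvirt, emulator thread pinning is expressed with an emulatorpin element inside cputune; the helper below picks the cpuset according to the rule described and renders that element. The function name is hypothetical, and the way OpenNebula actually generates the deployment file is not shown here.

```python
import xml.etree.ElementTree as ET


def emulatorpin_xml(emulator_cpus, pinned_cpus):
    """Return an <emulatorpin> element as text, or None if nothing can be pinned.

    emulator_cpus: value of EMULATOR_CPUS (for example "0,1"), or None/"" if unset
    pinned_cpus:   CPU IDs allocated to the VM by the PIN policy
    """
    if emulator_cpus:
        cpuset = emulator_cpus  # explicit host/cluster setting wins
    else:
        cpuset = ",".join(str(cpu) for cpu in pinned_cpus)  # fall back to VM CPUs
    if not cpuset:
        return None
    element = ET.Element("emulatorpin", {"cpuset": cpuset})
    return ET.tostring(element, encoding="unicode")


if __name__ == "__main__":
    print(emulatorpin_xml(None, [4, 5]))
    print(emulatorpin_xml("0,1", [4, 5]))
```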

(cherry picked from commit a9e6a8e000e9a5a2f56f80ce622ad9ffc9fa032b)

F OpenNebula/one#5516: adding rsync backup driver

(cherry picked from commit fb52edf5d009dc02b071063afb97c6519b9e8305)

F OpenNebula/one#5516: update install.sh, add vmid to source, some polish

Signed-off-by: Neal Hansen <nhansen@opennebula.io>

(cherry picked from commit 6fc6f8a67e435f7f92d5c40fdc3d1c825ab5581d)

F OpenNebula/one#5516: cleanup

Signed-off-by: Neal Hansen <nhansen@opennebula.io>

(cherry picked from commit 12f4333b833f23098142cd4762eb9e6c505e1340)

F OpenNebula/one#5516: update downloader, default args, size check

Signed-off-by: Neal Hansen <nhansen@opennebula.io>

(cherry picked from commit 510124ef2780a4e2e8c3d128c9a42945be38a305)

LL

(cherry picked from commit d4fcd134dc293f2b862086936db4d552792539fa)


Description

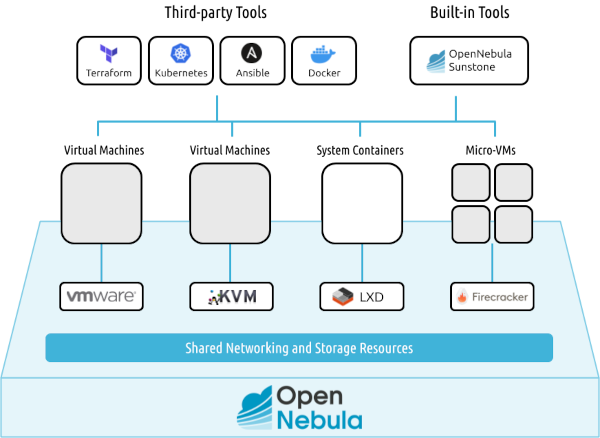

OpenNebula is an open source platform delivering a simple but feature-rich and flexible solution to build and manage enterprise clouds for virtualized services, containerized applications and serverless computing.

To start using OpenNebula

- Explore OpenNebula’s key features on our website.

- Have a look at our introductory datasheet.

- Browse our catalog of screencasts and video-tutorials.

- Download our technical white papers.

- See our Documentation.

- Join our Community Forum.

Contributing to OpenNebula

- Contribute to Development.

- Learn about our Add-on Catalog.

- Help us translate OpenNebula to your language.

- Report a security vulnerability.

Taking OpenNebula for a Test Drive

There are a couple of very easy ways to try out OpenNebula's functionality:

- miniONE for infrastructures based on open source hypervisors.

- vOneCloud for VMware based infrastructures.

Installation

You can find more information about OpenNebula’s architecture, installation, configuration and references to configuration files in this documentation section.

It is very useful to learn where log files of the main OpenNebula components are placed. Also check the reference about the main OpenNebula daemon configuration file.

Front-end Installation

The Front-end is the central part of an OpenNebula installation. This is the machine where the server software is installed and where you connect to manage your cloud. It can be a physical node or a virtual instance.

Please, visit the official documentation for more details and a step-by-step guide. Using the packages provided on our site is the recommended method, to ensure the installation of the latest version, and to avoid possible package divergences with different distributions. There are two alternatives here: you can add our package repositories to your system, or visit the software menu to download the latest package for your Linux distribution.

If there are no packages for your distribution, please check the build dependencies for OpenNebula and head to the Building from Source Code guide.

Node Installation

After the OpenNebula Front-end is correctly set up, the next step is preparing the hosts where the VMs are going to run. Please, refer to the documentation site for more details.

Contact

License

Copyright 2002-2022, OpenNebula Project, OpenNebula Systems (formerly C12G Labs)

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.